Caffe in Google Colab (2021)

I figured it would be nice to get the Caffe deep learning framework running in Google Colab. Why?

- Colab is a modified Jupyter notebook engine that runs on powerful GPUs such as the Nvidia Tesla T4 or Tesla V100, or on TPUs, for free or almost free (10 USD/month for the Pro subscription). Despite the shortcomings of developing in a JS-based IDE inside a web browser, and the possibility of being temporarily kicked off at random for exceeding a compute quota that is kept secret from the user, it’s extremely convenient for fast prototyping, starting new projects, practicing etc.

- Caffe is a deep learning framework that is somewhat less known than TensorFlow (Keras) / PyTorch, but when it comes to deploying models on the edge (embedded devices like custom boards, Qualcomm Snapdragon, Intel Movidius, custom chips dedicated to neural network processing etc.) it might have the largest market share. There are two reasons: it’s easy to modify (the code is easy to read and has little abstraction/boilerplate), and it cleanly separates the model architecture (.prototxt) from the rest of the code.

Anyway, it was supposed to take me 5 minutes, and I ended up devoting a few evenings to setting it up, so I figured I’d share my setup and the resulting notebook (colab | github).

Let’s start with the official tutorial. They’ve recently added packages that allow installation with apt-get, but then we lose the possibility to modify the framework’s code and rebuild. But let’s at least try to use their dependency install:

sudo apt build-dep caffe-cuda

# dependencies for CUDA version

# It requires a deb-src line in your sources.list.

This didn’t work for me, with:

Picking 'caffe-contrib' as source package instead of 'caffe-cuda'

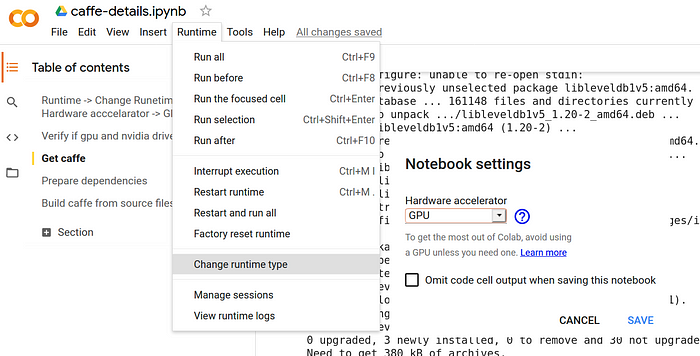

E: Unable to find a source package for caffe-cuda

So I figured I would just compile from source and solve the issues as they arise. That’s the most universal approach anyway, and it gives the most control over what is actually happening. But first we need to click Runtime -> Change runtime type -> select GPU.

This will prevent various errors such as: missing cuda or cuda-toolkit, errors while trying to install these manually, a crashing nvidia-smi command, or, after a seemingly successful compilation:

common.cpp:152] Check failed: error == cudaSuccess (100 vs. 0) no CUDA-capable device is detected

Compilation
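Before kicking off the build, it may be worth confirming that the runtime really has a GPU attached, since the errors listed above only show up much later. Below is a minimal sketch in Python, assuming that nvidia-smi being on the PATH and listing at least one device is a good enough signal. The helper name gpu_runtime_available is made up for illustration and is not part of Colab or Caffe.

```python
import shutil
import subprocess


def gpu_runtime_available() -> bool:
    """Return True if nvidia-smi exists and lists at least one GPU."""
    smi = shutil.which("nvidia-smi")
    if smi is None:
        # No driver tools on PATH: most likely a CPU-only runtime.
        return False
    try:
        result = subprocess.run(
            [smi, "-L"],  # -L prints one line per visible GPU
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


if __name__ == "__main__":
    print("GPU runtime detected:", gpu_runtime_available())
```

If this prints False, switching the runtime type to GPU first is probably cheaper than debugging the build afterwards.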

If you are doing this in bash, ignore the ! and % signs — they are for jupyter and colab. Let’s clone caffe, get the example Makefile (that tells linker where to look for the libraries and where the output binaries are to be stored) and let’s run make to start compilation!

!git clone https://github.com/BVLC/caffe.git

%cd caffe

!git reset --hard 9b891540183ddc834a02b2bd81b31afae71b2153

# reset to the newest revision that worked OK on 27.03.2021

!make clean

#just in case you already tried to build caffe by yourself

!cp Makefile.config.example Makefile.config

!make -j 4

# if you omit the 4, compilation will be faster but Colab may run out of RAM

There are multiple errors that you can get, depending on what is installed by default on Colab. The next paragraphs follow an error-solution format:

nvcc fatal : Unsupported gpu architecture 'compute_20'

This means that our makefile requests a build that supports very old GPUs (compute capability 2.0 means the GeForce 4xx and 5xx ‘Fermi’ series), but current versions of our CUDA dependencies can no longer handle such a build. Let’s comment out the corresponding lines. We’ll use sed, so that we don’t even have to open a text editor:

!sed -i 's/-gencode arch=compute_20/#-gencode arch=compute_20/' Makefile.config # old cuda versions won't compile

To solve errors about missing gflags (a library for parsing command line arguments) or missing glog (a cool library for printing stuff during debugging, but with more control):

./include/caffe/common.hpp:5:10: fatal error: gflags/gflags.h: No such file or directory

NVCC src/caffe/layers/sigmoid_layer.cu

In file included from ./include/caffe/blob.hpp:8:0,

from ./include/caffe/filler.hpp:10,

from src/caffe/layers/bias_layer.cu:3:

./include/caffe/common.hpp:6:10: fatal error: glog/logging.h: No such file or directory

#include <glog/logging.h>

^~~~~~~~~~~~~~~~

Execute:

!sudo apt-get install libgflags2.2

!sudo apt-get install libgflags-dev

!sudo apt-get install libgoogle-glog-dev

!make all -j 4

For:

In file included from .build_release/src/caffe/proto/caffe.pb.cc:5:0:

.build_release/src/caffe/proto/caffe.pb.h:9:10: fatal error: google/protobuf/stubs/common.h: No such file or directory

#include <google/protobuf/stubs/common.h>

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

compilation terminated.

Makefile:598: recipe for target '.build_release/src/caffe/proto/caffe.pb.o' failed

make: *** [.build_release/src/caffe/proto/caffe.pb.o] Error 1

make: *** Waiting for unfinished jobs....

In file included from ./include/caffe/blob.hpp:9:0,

from src/caffe/blob.cpp:4:

.build_release/src/caffe/proto/caffe.pb.h:9:10: fatal error: google/protobuf/stubs/common.h: No such file or directory

#include <google/protobuf/stubs/common.h>

…you lack protobuf, a format for serializing structured data (like JSON, but binary and defined by a schema). Installing the dependency should do the trick:

!sudo apt-get install libprotobuf-dev protobuf-compiler

!make all -j 4

If you get:

In file included from src/caffe/util/db_lmdb.cpp:2:0:

./include/caffe/util/db_lmdb.hpp:8:10: fatal error: lmdb.h: No such file or directory

#include "lmdb.h"

^~~~~~~~

compilation terminated.

Makefile:591: recipe for target '.build_release/src/caffe/util/db_lmdb.o' failed

Install lmdb (a library that provides a fast database for storing training data).

!sudo apt-get install liblmdb-dev

Similarly for:

CXX src/caffe/util/db_leveldb.cpp

In file included from src/caffe/util/db_leveldb.cpp:2:0:

./include/caffe/util/db_leveldb.hpp:7:10: fatal error: leveldb/db.h: No such file or directory

#include "leveldb/db.h"

^~~~~~~~~~~~~~

compilation terminated.

Makefile:591: recipe for target '.build_release/src/caffe/util/db_leveldb.o' failed

make: *** [.build_release/src/caffe/util/db_leveldb.o] Error 1

Get leveldb:

!sudo apt-get install libleveldb-dev

Never-ending story with hdf5

And now support for hdf5 (a format that is often used for storing neural network weights) fails to compile:

NVCC src/caffe/layers/lstm_unit_layer.cu

NVCC src/caffe/layers/hdf5_data_layer.cu

src/caffe/layers/hdf5_data_layer.cu:10:10: fatal error: hdf5.h: No such file or directory

#include "hdf5.h"

^~~~~~~~

compilation terminated.

So let’s try to get the dependencies:

!sudo apt-get install libhdf5-10

!sudo apt-get install libhdf5-serial-dev

!sudo apt-get install libhdf5-dev

!sudo apt-get install libhdf5-cpp-11

…and we still get the same error. This is where matters start to get complicated.

First of all, libhdf5-10 or libhdf5-cpp-11 may be unavailable.

You can try libhdf5-100 and libhdf5-cpp-100 (they work fine) or run

!apt-cache search libhdf5

to find a suitable candidate. But that is not the end of it: Caffe doesn’t see hdf5 even if it’s installed.

There are three more things to do, and it won’t work unless you execute all three. Environment variables are temporary and symlinks don’t move or delete anything, so you are relatively safe even if you do this on your local/remote machine instead of Colab.

First look where the libs are stored on your machine:

!find /usr -iname "*hdf5.so"

# I've got: /usr/lib/x86_64-linux-gnu/hdf5/serial

!find /usr -iname "*hdf5_hl.so"

Then create symlinks (they work as shortcuts, or aliases), because Caffe expects hdf5 naming without the ‘serial’ part:

!ln -s /usr/lib/x86_64-linux-gnu/libhdf5_serial.so /usr/lib/x86_64-linux-gnu/libhdf5.so

!ln -s /usr/lib/x86_64-linux-gnu/libhdf5_serial_hl.so /usr/lib/x86_64-linux-gnu/libhdf5_hl.so
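Running ln -s a second time fails because the link already exists, which is easy to hit when re-running notebook cells. As a hedged alternative, here is a small Python sketch that creates a link only when nothing is in the way. The function name ensure_symlink is hypothetical, and the demonstration uses a throwaway directory rather than /usr/lib.

```python
import os
import tempfile


def ensure_symlink(target: str, link_path: str) -> bool:
    """Create link_path -> target unless link_path already exists.

    Returns True if a new symlink was created, False if it was left alone.
    """
    if os.path.lexists(link_path):
        # Never overwrite: an existing file or link may be the real library.
        return False
    os.symlink(target, link_path)
    return True


if __name__ == "__main__":
    # Demonstration in a temporary directory instead of /usr/lib.
    with tempfile.TemporaryDirectory() as tmp:
        real = os.path.join(tmp, "libhdf5_serial.so")
        alias = os.path.join(tmp, "libhdf5.so")
        open(real, "w").close()
        print(ensure_symlink(real, alias))  # first call creates the link
        print(ensure_symlink(real, alias))  # second call leaves it untouched
```

In the notebook the same function could be called with the two library paths from the commands above.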

We also need to define the CPATH environment variable with the built-in magic command %env, so that the Caffe build knows where to look for the headers:

#colab version:

# no quotes here: %env stores the value literally, quotes included

%env CPATH=/usr/include/hdf5/serial/

# or for the regular shell version uncomment:

# export CPATH=$CPATH:/usr/include/hdf5/serial/
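The %env form above replaces whatever CPATH held before, while the shell form appends to it (and leaves a leading colon when CPATH was empty, which GCC treats as the current directory). A minimal Python sketch of an append that avoids both effects follows. The helper name append_search_dir is an assumption for illustration, and the sketch relies on commands started with ! inheriting os.environ from the notebook process.

```python
import os


def append_search_dir(current: str, new_dir: str) -> str:
    """Append new_dir to a search path string, skipping duplicates and empties."""
    parts = [p for p in current.split(os.pathsep) if p]
    if new_dir not in parts:
        parts.append(new_dir)
    return os.pathsep.join(parts)


if __name__ == "__main__":
    hdf5_include = "/usr/include/hdf5/serial/"
    os.environ["CPATH"] = append_search_dir(os.environ.get("CPATH", ""), hdf5_include)
    print(os.environ["CPATH"])
```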

Let’s also append /usr/include/hdf5/serial/ in our makefile after the “# Whatever else you find you need goes here.” comment, just to be sure.

Let’s use sed again (but you can also use a text editor):

!sed -i 's/\/usr\/local\/include/\/usr\/local\/include \/usr\/include\/hdf5\/serial\//' Makefile.config

!make all -j4

Now the build may finally succeed.
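Each missing dependency above only surfaced after a stretch of compilation, so it may save time to look for the headers up front. The following is a rough Python sketch under stated assumptions: the header names are taken from the compiler errors in this post, the include directories are guesses that should be adjusted to match INCLUDE_DIRS in Makefile.config, and missing_headers is a hypothetical helper, not part of Caffe.

```python
import os

# Headers named in the compiler errors above.
REQUIRED_HEADERS = [
    "gflags/gflags.h",
    "glog/logging.h",
    "google/protobuf/stubs/common.h",
    "lmdb.h",
    "leveldb/db.h",
    "hdf5.h",
]

# Assumed search locations; the real compiler may search more directories.
DEFAULT_INCLUDE_DIRS = [
    "/usr/include",
    "/usr/local/include",
    "/usr/include/hdf5/serial",
]


def missing_headers(headers, include_dirs):
    """Return the headers that cannot be found in any of include_dirs."""
    missing = []
    for header in headers:
        found = any(os.path.isfile(os.path.join(d, header)) for d in include_dirs)
        if not found:
            missing.append(header)
    return missing


if __name__ == "__main__":
    for header in missing_headers(REQUIRED_HEADERS, DEFAULT_INCLUDE_DIRS):
        print("missing:", header)
```

An empty result does not guarantee a clean build (linking can still fail), but a non-empty one points at the next apt-get install to run.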

OpenCV issues

CXX/LD -o .build_release/tools/compute_image_mean.bin

.build_release/lib/libcaffe.so: undefined reference to `cv::imread(cv::String const&, int)'

.build_release/lib/libcaffe.so: undefined reference to `cv::imencode(cv::String const&, cv::_InputArray const&, std::vector<unsigned char, std::allocator<unsigned char> >&, std::vector<int, std::allocator<int> > const&)'

.build_release/lib/libcaffe.so: undefined reference to `cv::imdecode(cv::_InputArray const&, int)'

collect2: error: ld returned 1 exit status

Caffe by default expects OpenCV 2.4.9, but Colab works with OpenCV 4.x.x.

Downgrading is a big pain (it usually requires compiling OpenCV manually, and a lot of work to get the dependencies for that). Fortunately, the temporary solution turned out to be very simple: turn on Caffe’s support for OpenCV 3.x.x, which is already available in the build configuration and is similar enough to 4.x.x that the build won’t blow up.

!sed -i 's/# OPENCV_VERSION := 3/OPENCV_VERSION := 3/' Makefile.config

!make all -j4

Test if it works by training the LeNet network on the MNIST dataset.

import caffe

If nothing blows up, this is a good sign: pycaffe seems to run OK. Let’s train LeNet on MNIST!

!./data/mnist/get_mnist.sh

You may get the following error (although the files may be back by now: 2 days ago the download failed, now it seems to work):

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz to ./data/MNIST/raw/train-images-idx3-ubyte.gz - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - HTTPError Traceback (most recent call last) in () 2 train_dataset = torchvision.datasets.MNIST( 3 root = './data', train = True, - → 4 transform = transforms_apply, download = True 5 ) 6

11 frames /usr/lib/python3.7/urllib/request.py in http_error_default(self, req, fp, code, msg, hdrs) 647 class HTTPDefaultErrorHandler(BaseHandler): 648 def http_error_default(self, req, fp, code, msg, hdrs): → 649 raise HTTPError(req.full_url, code, msg, hdrs, fp) 650 651 class HTTPRedirectHandler(BaseHandler):

HTTPError: HTTP Error 503: Service Unavailable

If that is the case, use an alternative download instead:

!wget www.di.ens.fr/~lelarge/MNIST.tar.gz

!tar -zxvf MNIST.tar.gz

!cp -rv MNIST/raw/* data/mnist/

And proceed with:

!./examples/mnist/create_mnist.sh

!./examples/mnist/train_lenet.sh

If you get one of the following, as I did:

sm 70: error == cudaSuccess (209 vs. 0) no kernel image is available for execution on the device

NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.

Let’s add support for newer GPUs, rebuild and rerun:

!sed -i 's/code=compute_61/code=compute_61 -gencode=arch=compute_70,code=sm_70 -gencode=arch=compute_75,code=sm_75 -gencode=arch=compute_75,code=compute_75/' Makefile.config

!make clean

!make all -j

!./examples/mnist/train_lenet.sh
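The extra flags in the sed command above follow a regular pattern: one sm_XX entry per GPU generation (7.0 for the V100, 7.5 for the T4), plus a compute_XX entry for the newest one so that later GPUs can still JIT-compile the PTX. A small Python sketch of that pattern, in case Colab hands out a different GPU, is below. The function name gencode_flags is invented for illustration, and the output uses the space-separated "-gencode arch=..." spelling found in Makefile.config.

```python
def gencode_flags(capabilities):
    """Build nvcc -gencode flags for compute capabilities such as ["70", "75"].

    Every capability gets native machine code (sm_XX); the highest one also
    gets PTX (compute_XX) as a fallback for newer devices.
    """
    caps = sorted(set(capabilities), key=int)
    flags = ["-gencode arch=compute_{0},code=sm_{0}".format(c) for c in caps]
    if caps:
        flags.append("-gencode arch=compute_{0},code=compute_{0}".format(caps[-1]))
    return " ".join(flags)


if __name__ == "__main__":
    print(gencode_flags(["70", "75"]))
```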

Output similar to the one below indicates that LeNet on MNIST was trained and everything is finally fine!

I0403 19:27:10.815840 4460 solver.cpp:464] Snapshotting to binary proto file examples/mnist/lenet_iter_10000.caffemodel

I0403 19:27:10.823678 4460 sgd_solver.cpp:284] Snapshotting solver state to binary proto file examples/mnist/lenet_iter_10000.solverstate

I0403 19:27:10.829105 4460 solver.cpp:327] Iteration 10000, loss = 0.00240565

I0403 19:27:10.829135 4460 solver.cpp:347] Iteration 10000, Testing net (#0)

I0403 19:27:11.217906 4465 data_layer.cpp:73] Restarting data prefetching from start.

I0403 19:27:11.232770 4460 solver.cpp:414] Test net output #0: accuracy = 0.9909

I0403 19:27:11.232807 4460 solver.cpp:414] Test net output #1: loss = 0.028092 (* 1 = 0.028092 loss)

I0403 19:27:11.232815 4460 solver.cpp:332] Optimization Done.

I0403 19:27:11.232821 4460 caffe.cpp:250] Optimization Done.
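To check the result without reading the log by eye, the test metrics can be pulled out with a regular expression. This is a minimal sketch that assumes the log was captured into a string and that the lines keep the "Test net output #N: name = value" shape shown above. The function name final_test_metrics is hypothetical.

```python
import re

# Matches lines such as:
#   ... solver.cpp:414] Test net output #0: accuracy = 0.9909
TEST_OUTPUT = re.compile(r"Test net output #\d+: (\w+) = ([-+0-9.eE]+)")


def final_test_metrics(log_text):
    """Return the last reported value of each test output, keyed by name."""
    metrics = {}
    for name, value in TEST_OUTPUT.findall(log_text):
        metrics[name] = float(value)  # later lines overwrite earlier ones
    return metrics


if __name__ == "__main__":
    sample = (
        "I0403 19:27:11.232770 4460 solver.cpp:414] Test net output #0: accuracy = 0.9909\n"
        "I0403 19:27:11.232807 4460 solver.cpp:414] Test net output #1: loss = 0.028092 (* 1 = 0.028092 loss)\n"
    )
    print(final_test_metrics(sample))
```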

Sources:

https://stackoverflow.com/questions/50560395/how-to-install-cuda-in-google-colab-gpus (installing cuda)

https://viencoding.com/article/216 (sm capabilities)